Part 1 of the “LLM Production Pipeline” Series

I’ll never forget the first time I got an LLM to work locally. I was messing around with HuggingFace’s transformers library, running a sentiment analysis model on some text data. The model actually worked! It classified my test sentences correctly, the output looked reasonable, and I felt accomplished ☺️!

The timing was kind of perfect, actually. My team had been talking about adding some LLM capability to one of our features for a few sprints. We kept putting it in the backlog, deprioritizing it, moving it around. You know how it goes. Eventually, my tech lead asked if anyone wanted to spike it out, just to see if it was even feasible.

I volunteered. I mean, I’d just spent a weekend playing with transformers anyway, so why not? Plus, it sounded interesting, and I wanted to learn more about LLMs in a work context.

I spent a few days tinkering in a Jupyter notebook. Got a basic prototype working. Showed it to a few people on my team during a sync. Everyone thought it was pretty cool! The PM got excited. My manager seemed interested. Someone even said “this is exactly what we need.”

Then sprint planning rolled around the next week.

“Okay, so the spike is done,” the PM said, pulling up the ticket. “How many story points would it take to actually build this properly?”

I stared at my screen. My brain went completely blank.

“Uh… more than 5?” I said. “Maybe 13? Honestly, I have no idea.”

And I really didn’t. Because I had absolutely no clue what “properly” even meant in this context. My prototype was a 150-line Jupyter notebook with hardcoded API keys and string manipulation. It was obvious that my current notebook was a mess and not ready to be in any production environment. How much work was between “it works in my notebook” and “this is production-ready”?

Spoiler alert: it takes a lot of work.

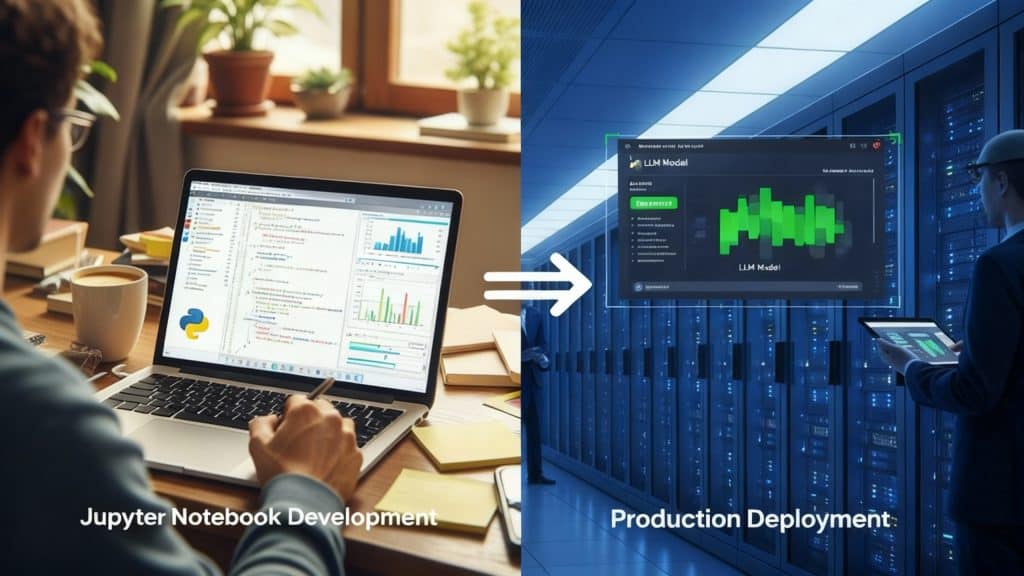

This isn’t a criticism of notebooks. I love notebooks! They’re perfect for experimentation, quick iterations, and showing your work. But there’s a massive gap between “it works in my notebook” and “this is ready to serve real traffic.” And if you come from a data science or research background, you might not even know what that gap looks like yet.

This blog series is about bridging the gap. We’re going to take a messy notebook with LLM code (the kind we all write when we’re just trying to make something work) and transform it into a production-ready system. No hand-waving, no shortcuts. We’ll cover type safety, testing, self-hosting, deployment, and all the stuff that separates a prototype from a product.

But before we dive into solutions, we need to honestly assess the problem. Let’s look at what makes notebook code so problematic for production systems.

The Mess We’re Starting With

Here’s what a typical “I just need to get this working” LLM notebook looks like. I’m being generous here because this is actually cleaner than some notebooks I’ve seen (and definitely cleaner than some I’ve written 🤭):

```python
from openai import OpenAI
import json

# Set up
client = OpenAI(api_key="sk-proj-NONE-YA-BUSINESS")  # TODO: move this later

def analyze_resume(resume_text):
    prompt = f"""You are a resume analyzer. Extract the following information:
- Candidate name
- Years of experience
- Skills (as a list)
- Education

Resume:
{resume_text}

Return the results as JSON.
"""
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": prompt}],
        temperature=0,
    )
    result = response.choices[0].message.content
    return json.loads(result)

# Test it out
sample_resume = """
John Smith
Senior Software Engineer with 8 years of experience...
"""

output = analyze_resume(sample_resume)
print(output)
```

At first glance, this might seem fine. It’s only 30 lines of code! It works! You can run it, get results, and show your team. What’s the problem?

Well, let me show you.

Six Problems Hiding in Plain Sight

Problem 1: No Type Safety

Quick question: what does analyze_resume() return?

If you said “a dictionary,” you’re technically correct. But what keys are in that dictionary? What are their types? Is years_of_experience an int or a string? Is skills a list of strings or a comma-separated string?

You have to read the prompt, hope the LLM returns what you asked for, and pray that it’s consistent. There’s no contract, no schema, no guarantee. Your IDE can’t help you with autocomplete because it has no idea what you’re working with.

I’ve run into this exact issue before. I had a function that was supposed to return a structured response from an LLM, and I just assumed experience_years would be an integer. Made sense, right? Turns out the LLM sometimes returned “5 years” as a string, sometimes “5” as a string, and occasionally (I kid you not) “five” spelled out. My downstream code that tried to do if experience_years > 3 crashed in three different ways depending on which format showed up.
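Until we get to better tooling later in the series, here’s a minimal sketch of what a contract can look like with only the standard library: a dataclass that states the expected shape, plus a parser that coerces the LLM’s JSON into that shape or fails loudly at the boundary. The field names and coercion rules are assumptions for illustration, since the original prompt never pins down key names.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class ResumeAnalysis:
    """The shape we expect back from the LLM (hypothetical field names)."""
    candidate_name: str
    years_of_experience: int
    skills: list[str]
    education: str


def parse_years(value: object) -> int:
    """Coerce 5, "5", or "5 years" to an int; reject anything else."""
    # bool is a subclass of int, so exclude it explicitly
    if isinstance(value, int) and not isinstance(value, bool):
        return value
    if isinstance(value, str):
        match = re.match(r"\s*(\d+)", value)
        if match:
            return int(match.group(1))
    raise ValueError(f"Cannot interpret years_of_experience: {value!r}")


def parse_resume_analysis(raw: str) -> ResumeAnalysis:
    """Validate raw LLM output once, at the boundary, instead of deep in downstream code."""
    data = json.loads(raw)  # raises json.JSONDecodeError on non-JSON output
    skills = data["skills"]  # a missing key raises KeyError immediately
    # Accept a comma-separated string as well as a proper list
    if isinstance(skills, str):
        skills = [s.strip() for s in skills.split(",") if s.strip()]
    return ResumeAnalysis(
        candidate_name=str(data["candidate_name"]),
        years_of_experience=parse_years(data["years_of_experience"]),
        skills=[str(s) for s in skills],
        education=str(data["education"]),
    )
```

With this in place, “8 years” becomes 8, and “five” raises a ValueError naming the offending field right where the response is parsed, instead of a TypeError somewhere downstream.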

Problem 2: Configuration Management (The Security Nightmare)

See that API key on line 5? That’s a production incident waiting to happen.

Let me tell you a story. Early in my career at one of my previous companies, I was reviewing a PR from someone on my team. The code looked fine, tests passed, and everything seemed great. Then I noticed it: a HuggingFace API token hardcoded right there in the Python file.

“Did you push this to the repo?” I asked.

“Yeah, but I changed it right after!”

My stomach dropped. “How many commits ago?”

“Like, two commits back.”

Here’s the thing most people don’t realize (I didn’t know this either when I was starting out): Git doesn’t forget. Even if you change a secret in a later commit, it’s still sitting there in your repo’s history. Anyone who clones the repository can see it with a simple git log -p command. And if your repo is public (or gets accidentally made public), that secret is now out in the wild.

GitHub has secret scanning, but it’s not instantaneous. By the time you get the notification email, bots may already have scraped your token and added it to a database somewhere.

Right now, there’s probably a data scientist somewhere waking up to a $3,000 OpenAI bill because a bot found their exposed API key in a public repo and has been hammering GPT-4 endpoints for the past 12 hours. The hacker running that bot doesn’t care about your project or your career. They just want free compute, and your leaked token is their golden ticket.

What to Do If You’ve Leaked a Secret

- Revoke the key immediately

- Report the incident

- Generate a new one

- Check your billing ASAP (OpenAI keys, AWS keys, anything that costs money)

- Use git filter-branch or BFG Repo-Cleaner to remove it from history (git filter-repo is the tool Git’s own documentation now recommends over filter-branch)

- Force push (coordinate with your team first so you don’t mess up their local repos)

- Learn from it
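Learning from it is easier with a guardrail that catches keys before they’re ever committed. Here’s a minimal sketch of a scanner you could call from a Git pre-commit hook with the staged file paths. The regex is an assumption (an sk- or sk-proj- prefix followed by a long run of key characters), not OpenAI’s official key format, and dedicated tools and GitHub’s own secret scanning cover far more patterns.

```python
import re
from pathlib import Path

# Heuristic for OpenAI-style keys (an assumption, not the official format):
# "sk-" or "sk-proj-" followed by at least 20 key-like characters.
OPENAI_KEY_PATTERN = re.compile(r"\bsk-(?:proj-)?[A-Za-z0-9_-]{20,}")


def find_suspected_keys(text: str) -> list[str]:
    """Return every substring that looks like an OpenAI-style API key."""
    return OPENAI_KEY_PATTERN.findall(text)


def scan_files(paths: list[str]) -> int:
    """Report suspected keys in each file and return how many files were flagged."""
    flagged = 0
    for path in paths:
        text = Path(path).read_text(encoding="utf-8", errors="ignore")
        hits = find_suspected_keys(text)
        if hits:
            flagged += 1
            for hit in hits:
                # Print only a short prefix so the scanner doesn't leak the key itself
                print(f"{path}: possible API key {hit[:8]}...")
    return flagged
```

A hook script would exit non-zero whenever scan_files returns a positive count, which blocks the commit. Printing only the first few characters of each hit keeps the scanner from leaking the key into terminal output.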

The Right Way to Handle Secrets

```python
import os

from dotenv import load_dotenv
from openai import OpenAI

# Load variables from a local .env file into the environment
load_dotenv()

# Optional: fail fast with a clear message if the key is missing.
# This check has to come before OpenAI(), which raises its own error
# when no API key can be found.
if not os.getenv("OPENAI_API_KEY"):
    raise ValueError("OPENAI_API_KEY environment variable not set")

# OpenAI() automatically reads OPENAI_API_KEY from the environment
client = OpenAI()
```

And add .env to your .gitignore file. Always. Every single time. No exceptions. I have this muscle memory now where if I create a new repo, .gitignore is literally the first file I create.
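As the project grows, it helps to gather every setting in one place and validate it once at startup. A minimal sketch, assuming hypothetical variable names LLM_MODEL and LLM_TIMEOUT_SECONDS alongside OPENAI_API_KEY; the defaults are placeholders, not recommendations.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Settings:
    """All runtime configuration, loaded once and treated as read-only."""

    openai_api_key: str = field(repr=False)  # keep the key out of reprs and logs
    model: str
    timeout_seconds: float


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build Settings from environment variables, failing fast on bad values."""
    api_key = env.get("OPENAI_API_KEY")
    if not api_key:
        raise ValueError("OPENAI_API_KEY environment variable not set")
    # LLM_MODEL and LLM_TIMEOUT_SECONDS are hypothetical variable names
    model = env.get("LLM_MODEL", "gpt-4")
    raw_timeout = env.get("LLM_TIMEOUT_SECONDS", "30")
    try:
        timeout = float(raw_timeout)
    except ValueError:
        raise ValueError(f"LLM_TIMEOUT_SECONDS must be a number, got {raw_timeout!r}") from None
    if timeout <= 0:
        raise ValueError("LLM_TIMEOUT_SECONDS must be positive")
    return Settings(openai_api_key=api_key, model=model, timeout_seconds=timeout)
```

You’d call load_settings() once at startup, after load_dotenv(), and pass the values to whatever makes the API call. Because it accepts any mapping, tests can hand it a plain dict instead of touching the real environment.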

Problem 3: No Testability

How do you test the analyze_resume() function? Right now, you can’t. Well, you can, but you’d have to actually call the OpenAI API every single time you run your tests. And that creates a cascade of problems:

- It costs money (every test run hits your API bill)

- It’s slow (GPT-4 responses can take several seconds each)

- It’s non-deterministic (same input, potentially different outputs)

- It’s fragile (tests fail when OpenAI has an outage, which happens…a lot)

The function is tightly coupled to the external API. There’s no way to test your logic without actually calling OpenAI. This means your tests are expensive, slow, and flaky. That’s the trifecta of bad tests. Your CI/CD pipeline would take forever to run, cost a fortune, and randomly fail for reasons that have nothing to do with your code.
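The usual way out is to stop the function from reaching for a global client and instead hand it a dependency. A minimal sketch of that pattern: LLMClient, complete(), OpenAIChatClient, and FakeLLM are hypothetical names, not part of the OpenAI SDK. Only the adapter touches the real API; the fake returns canned JSON, so the test runs offline, instantly, and for free.

```python
import json
from typing import Any, Protocol


class LLMClient(Protocol):
    """Anything that turns a prompt into a completion string (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class OpenAIChatClient:
    """Production adapter: the only code that talks to the real API."""

    def __init__(self, client: Any, model: str = "gpt-4") -> None:
        self.client = client  # expected to be an openai.OpenAI instance
        self.model = model

    def complete(self, prompt: str) -> str:
        response = self.client.chat.completions.create(
            model=self.model,
            messages=[{"role": "user", "content": prompt}],
            temperature=0,
        )
        return response.choices[0].message.content


def analyze_resume(resume_text: str, llm: LLMClient) -> dict:
    """Our logic only: build the prompt, call the injected client, parse the JSON."""
    prompt = (
        "You are a resume analyzer. Extract the candidate name, years of "
        "experience, skills (as a list), and education.\n\n"
        f"Resume:\n{resume_text}\n\nReturn the results as JSON."
    )
    return json.loads(llm.complete(prompt))


class FakeLLM:
    """Test double: records every prompt and returns a canned response."""

    def __init__(self, response: str) -> None:
        self.response = response
        self.prompts: list[str] = []

    def complete(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return self.response


def test_analyze_resume_parses_json_and_includes_resume() -> None:
    fake = FakeLLM('{"candidate_name": "John Smith", "years_of_experience": 8}')
    result = analyze_resume("John Smith\nSenior Software Engineer", fake)
    assert result["years_of_experience"] == 8
    assert "John Smith" in fake.prompts[0]
```

This test checks our own logic (prompt construction and parsing) deterministically. Whether the real model follows the prompt is a separate question, answered by a smaller set of tests you run deliberately against the live API.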

Problem 4: String Manipulation Hell

Look at that prompt construction again:

```python
prompt = f"""You are a resume analyzer. Extract the following information:
- Candidate name
- Years of experience
...
"""
```

This is fine for a quick prototype. But what happens when:

- You need to reuse this prompt logic in three different places?

- You want to A/B test different prompt versions?

- The prompt needs to be translated for different languages?

- You want to keep track of which prompt version produced which results?

- Someone else on your team needs to tweak the prompt format?

Right now, your prompt is just a string buried in a function. You can’t version it, can’t test different variations systematically, can’t share it across files without copy-pasting. And if you have multiple prompts scattered across your codebase (which you inevitably will), good luck keeping them consistent or even figuring out which ones are actively being used.

I’ve been in codebases where we had seven different versions of basically the same prompt because everyone just copied and tweaked the string instead of having a centralized place for prompt templates. When we needed to change something fundamental, we had to hunt through the entire repo. Not fun.
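One common remedy is to keep prompts in a single registry keyed by name and version, and render them through one function. A minimal sketch using the standard library’s string.Template; the registry layout, the v2 wording, and the names PROMPTS and render_prompt are assumptions for illustration.

```python
from string import Template

# One place for every prompt, keyed by (name, version). Hypothetical layout.
PROMPTS: dict[tuple[str, str], Template] = {
    ("resume_analyzer", "v1"): Template(
        "You are a resume analyzer. Extract the following information:\n"
        "- Candidate name\n- Years of experience\n- Skills (as a list)\n- Education\n\n"
        "Resume:\n$resume_text\n\nReturn the results as JSON."
    ),
    ("resume_analyzer", "v2"): Template(
        "Extract candidate_name, years_of_experience (integer), skills (list of "
        "strings), and education from the resume below. Respond with JSON only.\n\n"
        "Resume:\n$resume_text"
    ),
}


def render_prompt(name: str, version: str, **variables: str) -> tuple[str, str]:
    """Render a prompt and return it with a version tag to log next to each result."""
    template = PROMPTS[(name, version)]
    # substitute() raises KeyError if a variable is missing, instead of
    # silently sending a half-filled prompt to the model
    text = template.substitute(**variables)
    return text, f"{name}@{version}"
```

Since every caller goes through render_prompt, an A/B test is just a different version argument, and logging the returned tag alongside each result answers “which prompt produced this?”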

Problem 5: No Error Handling

What happens when:

- OpenAI’s API is down?

- You hit rate limits?

- The response isn’t valid JSON?

- The model returns null for a required field?

- Your request times out after 30 seconds?

Right now, the answer is: your code crashes. Maybe you get a stack trace if you’re lucky. Maybe your whole service goes down. Maybe you lose data. Who knows!

```python
# This will crash if the API is down (openai.APIConnectionError)
# or if you hit rate limits (openai.RateLimitError)
response = client.chat.completions.create(...)

# This will crash if the LLM doesn't return valid JSON (json.JSONDecodeError)
return json.loads(result)
```

There’s no retry logic, no fallback behavior, no graceful degradation. Just exceptions waiting to blow up your application. And in production, that’s not just inconvenient or embarrassing, it’s legitimately dangerous to your service’s reliability.
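A minimal sketch of one standard fix: retry transient failures with exponential backoff and jitter, and give up loudly after a few attempts. TransientError and call_with_retries are hypothetical names; in real code you might pass the OpenAI SDK’s openai.RateLimitError and openai.APIConnectionError as retry_on. The SDK’s client also accepts max_retries and timeout options of its own, so check those before layering your own retries on top.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Stand-in for errors worth retrying (rate limits, timeouts, 5xx)."""


def call_with_retries(
    fn: Callable[[], T],
    *,
    max_attempts: int = 4,
    base_delay: float = 0.5,
    retry_on: tuple[type[BaseException], ...] = (TransientError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying retryable errors with exponential backoff plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # base_delay, 2x, 4x, ... plus up to 100% random jitter so many
            # clients don't all retry at the same instant
            delay = base_delay * (2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay))
    raise AssertionError("unreachable")
```

The injectable sleep exists so tests can run without actually waiting. Invalid JSON is a judgment call: adding json.JSONDecodeError to retry_on re-asks the model, but you still need a fallback for when every attempt fails.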

Problem 6: No Observability

When something goes wrong in production (and trust me, it will), how do you debug it?

Your current notebook has a print statement. That’s it. You have no logs, no metrics, no tracing, no way to answer questions like:

- How long do requests actually take?

- Which prompts are performing poorly?

- What’s our error rate?

- Which inputs consistently cause failures?

- How much are we spending on API calls?

You’re basically flying blind. I’ve been pulled into emergency debugging sessions where a service was failing intermittently, and let me tell you, having zero visibility into what’s happening is genuinely awful. You end up adding a bunch of print statements, redeploying, waiting for it to fail again, checking logs, and repeating that cycle until you find the issue. It’s tedious, time-consuming, and totally avoidable.
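You don’t need a full tracing stack to stop flying blind. A minimal sketch with the standard logging module: a decorator that emits one JSON line per call with its latency and outcome, which is enough to start answering the latency and error-rate questions above. The decorator name and log fields are assumptions; for spend, you could also log the token counts the OpenAI response reports in its usage field.

```python
import functools
import json
import logging
import time
from typing import Any, Callable

logger = logging.getLogger("llm")


def log_llm_call(operation: str) -> Callable:
    """Log one JSON line per call: operation, outcome, error type, latency."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            outcome, error_type = "ok", None
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                outcome, error_type = "error", type(exc).__name__
                raise  # record the failure, but let the caller handle it
            finally:
                # Runs on success and failure alike, so every call is logged
                latency_ms = round((time.perf_counter() - start) * 1000, 1)
                logger.info(json.dumps({
                    "operation": operation,
                    "outcome": outcome,
                    "error_type": error_type,
                    "latency_ms": latency_ms,
                }))
        return wrapper
    return decorator
```

Decorating the notebook’s function with @log_llm_call("analyze_resume") and calling logging.basicConfig(level=logging.INFO) once at startup gets you searchable, per-call log lines.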

What Does “Production-Ready” Actually Mean?

I’ve thrown around the term “production-ready” a lot, but what does it actually mean? Because I’ve seen people interpret it very differently depending on their background.

For some teams, “production-ready” means “it runs without crashing most of the time.” For others, it means “it meets all 47 requirements in our compliance checklist and has been blessed by three different committees.”

Here’s my definition: production-ready code is code that you wouldn’t panic about if you got a Slack message saying it broke while you were in a meeting or away from your desk.

That means:

- Reliable (it handles errors gracefully and recovers from failures)

- Observable (you can see what it’s doing and diagnose problems quickly)

- Maintainable (other people can understand and modify it, including future you)

- Testable (you can verify it works without manual clicking around)

- Secure (it doesn’t leak secrets or expose vulnerabilities)

- Performant (it can handle your expected load, plus some buffer)

Notice I didn’t say “perfect.” Production-ready doesn’t mean bug-free. It means you’ve thought through the failure modes and built in safeguards. You’ve planned for things to go wrong, because they will.

Where We’re Going in This Series

Over the next three posts, we’re going to systematically fix every problem I just outlined. Here’s the roadmap 👇🏾

Post 2: Treating Prompts Like Code with BAML

We’ll introduce BAML (a domain-specific language for LLM applications) and use it to add type safety and structure to our prompts. You’ll learn why treating prompts like untyped strings is asking for trouble, and how to build contracts that actually enforce what you expect.

Post 3: Testing Your LLM Applications (Without Going Broke)

We’ll build a proper testing strategy that doesn’t require calling OpenAI’s API for every single test. You’ll learn about mocking, test doubles, and how to test LLM applications in a way that’s both thorough and actually maintainable.

Post 4: Self-Hosting LLMs with vLLM

We’ll set up vLLM (a high-performance inference engine) to self-host open-source models. You’ll learn when self-hosting makes sense, when it doesn’t, and how to actually do it with Docker and GPU support.

Post 5 (Bonus Post): The Complete Production Pipeline

This post takes everything from parts 1-4 and puts it into production with automated CI/CD pipelines, proper monitoring, and deployment strategies. You’ll learn three deployment approaches: blue-green, canary, and rolling. Plus I’ll include runbooks for handling the inevitable issues. Basically, it’s the difference between running docker-compose up on your laptop and serving real user traffic in the cloud.

By the end of this series, you’ll have transformed a messy notebook into a production system that you’d actually want to maintain. More importantly, you’ll understand the principles behind each decision, so you can apply them to your own projects.

Homework: Audit Your Own Code

Before moving on to the next post, I want you to do an honest audit of your own LLM code (if you have any). If you don’t have LLM code yet, grab any data science notebook you’ve written recently. This exercise works for basically any prototype code.

Go through and honestly assess these six problems:

- Type safety: Can you tell what your functions return without running them?

- Configuration: Are any secrets hardcoded? Did they ever make it into version control?

- Testability: Can you test your logic without hitting external APIs?

- String manipulation: Are your prompts scattered throughout your code as raw strings?

- Error handling: What actually happens when things go wrong?

- Observability: If this broke while you were away, could you figure out why within 15 minutes?

Be brutally honest with yourself. I’m not here to judge (I’ve written plenty of questionable notebook code over the years). But you can’t fix problems you don’t acknowledge. And the sooner you recognize these patterns, the easier it becomes to avoid them in future projects.

Write down what you find. Take notes on which problems show up most often in your code. We’re going to systematically address each one over this series.

In the next post, we’ll tackle problems 1 and 4 by introducing BAML and treating our prompts like the first-class citizens they deserve to be. We’ll add type safety, structure, and actual sanity to our LLM applications. No more crossing your fingers and hoping the output is what you expect.

See you there 🚀!